Chronic atrophic gastritis detection with a convolutional neural network considering stomach regions

Misaki Kanai, Ren Togo, Takahiro Ogawa, Miki Haseyama

Abstract

Key words: Gastric cancer risk; Chronic atrophic gastritis; Helicobacter pylori; Gastric X-ray images; Deep learning; Convolutional neural network; Computer-aided diagnosis

INTRODUCTION

Gastric cancer is the third leading cause of death among all types of malignancies, behind lung cancer and colorectal cancer[1]. One of the major risk factors for gastric cancer is Helicobacter pylori (H. pylori) infection[2-4]. Chronic atrophic gastritis (CAG) induced by H. pylori leads to atrophic mucosa[5], which increases the risk of gastric cancer[6]. Moreover, it has been revealed that H. pylori eradication therapy is effective in reducing the risk of gastric cancer[7-9].

According to the International Agency for Research on Cancer, new cases of gastric cancer in Eastern Asia account for more than half of those in the world. To reduce gastric cancer mortality, population-based screening for gastric cancer has been conducted through endoscopic and X-ray examinations in Japan[10]. Although the detection rate of early gastric cancer by endoscopic examination is higher than that by X-ray examination[11], mass screening using endoscopic examination has some problems, e.g., a limit to the number of patients who can be examined[12]. Therefore, in Japan, endoscopic examination is often performed for cases in which something unusual is found by X-ray examination[13]. However, the interpretation of gastric X-ray images (GXIs) requires sufficient experience and knowledge, and there is a shortage of doctors who are skilled in diagnosis[10]. The development of computer-aided diagnosis (CAD) systems is needed to help doctors who do not have sufficient experience and knowledge.

To realize CAD systems, researchers have been exploring methods for CAG detection from GXIs[14-17]. In early works, attempts were made to describe the visual features of CAG with mathematical models[14,15]. For more accurate detection, in our earlier papers[16,17], we introduced convolutional neural networks (CNNs)[18], since it has been reported that CNNs outperform methods with hand-crafted features in various tasks[19-21]. Because GXIs have high resolutions, we adopted a CNN trained on patches obtained by dividing the original images, i.e., a patch-based CNN, to preserve their detailed textures. In our previous investigation[17], we focused on the outside patches of the stomach since the textures of these patches do not depend on the image-level ground truth (GT), i.e., CAG or non-CAG. In clinical settings, GXIs generally have only the image-level GT. Therefore, we introduced manual annotation of stomach regions for all GXIs used in training and assigned the patch-level class labels based on the image-level GT and the stomach regions. Although the previously reported method already achieved high detection performance (sensitivity: 0.986, specificity: 0.945), there remains a problem. In general, CNNs require a large number of labeled images for training to determine millions of parameters that can capture the semantic contents of images. However, manually annotating stomach regions for a large number of GXIs is time- and labor-consuming. In other words, the previous method can practically utilize only a small number of GXIs even when numerous GXIs are available for training.

In this paper, we propose a novel CAG detection method that requires manual annotation of stomach regions for only a small number of GXIs. The main contribution of this paper is the effective use of stomach regions that are manually annotated for only a part of the GXIs used in training. We assume that distinguishing the inside and outside patches of the stomach is much easier for patch-based CNNs than distinguishing whether the patches are extracted from CAG images or non-CAG images. Therefore, we newly introduce the automatic estimation of stomach regions for non-annotated GXIs. In this way, we can reduce the workload of manual annotation and train a patch-based CNN that considers stomach regions with all GXIs, even when we manually annotate stomach regions for only some of the GXIs used in training.

MATERIALS AND METHODS

The proposed method that requires manual annotation of stomach regions for only a small number of GXIs to detect CAG is presented in this section. This study was reviewed and approved by the institutional review board. Patients were not required to give informed consent to this study since the analysis used anonymous data that were obtained after each patient agreed to inspections by written consent. In this study, Kanai M from Graduate School of Information Science and Technology, Hokkaido University, Togo R from Education and Research Center for Mathematical and Data Science, Hokkaido University, Ogawa T and Haseyama M from Faculty of Information Science and Technology, Hokkaido University, took charge of the statistical analysis since they have an advanced knowledge of statistical analysis.

Study subjects

The GXIs used in this study were obtained from 815 subjects. Each subject underwent a gastric X-ray examination and an endoscopic examination at The University of Tokyo Hospital in 2010, and GXIs that had the same diagnostic results in both examinations were used in this study. Criteria for exclusion were a history of gastric acid suppressant use, a history of H. pylori eradication therapy, and insufficient image data. The ground truth for this study was the diagnostic results in X-ray and endoscopic examinations. In the X-ray evaluation, subjects were classified into four categories, i.e., “normal”, “mild”, “moderate” and “severe”, based on atrophic levels[22]. It should be noted that the stomach with non-CAG has straight and fine fold distributions and fine mucosal surfaces, and the stomach with CAG has non-straight and snaked folds and coarse mucosal surfaces. X-ray examination can visualize these atrophic characteristics with a barium contrast medium. In this paper, we show that a CAG detection model can learn these differences from a small number of training images. We regarded subjects whose diagnosis results were “normal” as non-CAG subjects and the other subjects as CAG subjects. In the endoscopic examination, by contrast, subjects were classified into seven categories, i.e., no atrophic change (C0), three closed types of atrophic gastritis (C1, C2, C3) and three open types of atrophic gastritis (O1, O2, O3), based on the Kimura-Takemoto seven-grade classification[23]. Since C1 is defined as the atrophic borderline, we excluded subjects whose diagnosis results were C1 from the dataset. We regarded subjects whose diagnosis results were C0 as non-CAG subjects and the other subjects as CAG subjects. As a result, we regarded 240 subjects as CAG subjects and 575 subjects as non-CAG subjects.

The GXIs used in this study were 2048 × 2048 pixels in size with 8-bit grayscale. Fluoroscopy was performed with a digital radiography system, and exposure was controlled by an automatic exposure control mechanism. To realize the learning of our model at the patch level, GXIs taken from the double-contrast frontal view of the stomach in the supine position were used in this study.

Preparation of dataset for training

For more accurate detection, we focus on the outside patches of the stomach since textures of these patches do not depend on the image-level GT at all. Although stomach regions can be easily determined without highly dedicated knowledge, manually annotating stomach regions for a large number of GXIs is not practical. In order to overcome this problem, we split GXIs for training into the following two groups.

(1) Manual annotation group (MAG): This group consists of GXIs for which we manually annotate the stomach regions. Ideally, the number of GXIs in this group is small since annotation for a large number of GXIs is time- and labor-consuming.

(2) Automatic annotation group (AAG): This group consists of GXIs for which we automatically estimate the stomach regions with a CNN. By estimating the stomach regions automatically, a large number of GXIs can be used for training without the large burden of manual annotation.

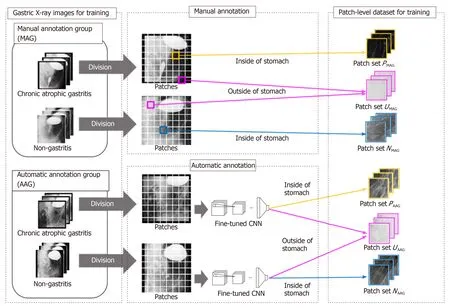

An overview of the preparation of the dataset is shown in Figure 1. The preparation of the dataset for training consists of two steps. In the first step, we annotate the stomach regions for GXIs in the MAG manually and select patches for training from GXIs in the MAG. In the second step, we estimate the stomach regions for GXIs in the AAG automatically and select patches for training from GXIs in the AAG.

First step - Patch selection for training from the MAG: Each GXI in the MAG is divided into patches. To separate the inside and outside patches of the stomach, we categorize the patches with the following three kinds of patch-level class labels (P, N, U):

P: inside patches of the stomach in CAG images, i.e., positive patches;

N: inside patches of the stomach in non-CAG images, i.e., negative patches;

U: outside patches of the stomach in both CAG and non-CAG images, i.e., unrelated patches.

Note that patches in which more than 80% of the area lies inside the stomach are annotated as P or N, and patches in which less than 1% of the area lies inside the stomach are annotated as U. All other patches are discarded from the training dataset. We denote the sets of patches with the patch-level class labels P, N, and U as $\mathcal{P}_{\mathrm{MAG}}$, $\mathcal{N}_{\mathrm{MAG}}$, and $\mathcal{U}_{\mathrm{MAG}}$, respectively. By setting the class label U, we can train a patch-based CNN that can distinguish the inside and outside patches of the stomach.
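The labeling rule for the MAG can be sketched in a few lines of Python. This is a minimal illustration, not the authors' implementation: the binary mask representation (1 = inside the stomach, 0 = outside) and the function names `inside_fraction` and `label_patch` are assumptions introduced here; only the 80% and 1% thresholds come from the text.

```python
from typing import List, Optional


def inside_fraction(mask: List[List[int]], top: int, left: int, size: int) -> float:
    """Fraction of pixels in a size x size patch that lie inside the stomach.

    `mask` is a hypothetical binary annotation (1 = inside, 0 = outside).
    """
    inside = sum(
        mask[row][col]
        for row in range(top, top + size)
        for col in range(left, left + size)
    )
    return inside / (size * size)


def label_patch(fraction: float, image_is_cag: bool) -> Optional[str]:
    """Return 'P', 'N', or 'U' for a patch, or None if it is discarded."""
    if fraction > 0.80:  # mostly inside the stomach
        return "P" if image_is_cag else "N"
    if fraction < 0.01:  # essentially outside the stomach
        return "U"
    return None  # ambiguous border patch, dropped from training


# Toy 4 x 4 mask with the stomach in the top-left corner.
toy_mask = [
    [1, 1, 0, 0],
    [1, 1, 0, 0],
    [0, 0, 0, 0],
    [0, 0, 0, 0],
]
print(label_patch(inside_fraction(toy_mask, 0, 0, 2), image_is_cag=True))
```

Patches that straddle the stomach boundary fall between the two thresholds and are returned as `None`, which mirrors the discarding step described above.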

Second step - Patch selection for training from the AAG: To estimate the stomach regions for GXIs in the AAG, we introduce a fine-tuning technique that is effective when only a small number of images are available for training[24]. First, we prepare a CNN whose weights are transferred from a CNN pre-trained for image classification with a large number of labeled natural images. The number of nodes in the last fully connected layer of the CNN is changed to the number of patch-level class labels (P, N, U). Because of this change, we initialize the weights of the last fully connected layer with random values sampled from a uniform distribution. Next, the CNN is fine-tuned with the patches obtained in the first step to calculate the probabilities $p_c$ ($c \in \{\mathrm{P}, \mathrm{N}, \mathrm{U}\}$, $\sum_c p_c = 1$) of belonging to the patch-level class label $c$. By transferring the weights from the pre-trained CNN, accurate prediction of the patch-level class labels is realized even when the number of GXIs in the MAG is small. Second, we estimate the stomach regions for GXIs in the AAG. We divide each GXI in the AAG into patches. By inputting the patches into the fine-tuned CNN, we calculate the probabilities $p_c$. We can regard $p_{\mathrm{P}} + p_{\mathrm{N}}$ and $p_{\mathrm{U}}$ as the probability of the inside of the stomach and the probability of the outside of the stomach, respectively. Therefore, we handle the patches that satisfy $p_{\mathrm{P}} + p_{\mathrm{N}} \geq \alpha$ ($0.5 < \alpha \leq 1$) and the patches that satisfy $p_{\mathrm{U}} \geq \alpha$ as the inside and outside patches of the stomach, respectively. Finally, we assign the three kinds of patch-level class labels (P, N, U) to the patches based on the estimated results and the image-level GT. We denote the sets of selected patches with the patch-level class labels P, N, and U as $\mathcal{P}_{\mathrm{AAG}}$, $\mathcal{N}_{\mathrm{AAG}}$, and $\mathcal{U}_{\mathrm{AAG}}$, respectively. By estimating the stomach regions for the AAG, we can add $\mathcal{P}_{\mathrm{AAG}}$, $\mathcal{N}_{\mathrm{AAG}}$, and $\mathcal{U}_{\mathrm{AAG}}$ to the dataset for training even though the stomach regions are manually annotated only for the MAG.
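The selection rule for AAG patches can be illustrated as follows. This is a sketch under stated assumptions: the function name `assign_aag_label` is hypothetical, and treating patches that meet neither threshold as discarded is an interpretation of "selected patches" rather than something the text states explicitly. The probabilities are assumed to come from the fine-tuned CNN.

```python
from typing import Optional


def assign_aag_label(p_pos: float, p_neg: float, p_unrel: float,
                     image_is_cag: bool, alpha: float = 0.9) -> Optional[str]:
    """Assign 'P', 'N', or 'U' to an AAG patch, or None if it is not selected.

    p_pos, p_neg, p_unrel are the CNN outputs for classes P, N, U (summing to 1).
    """
    if not 0.5 < alpha <= 1.0:
        raise ValueError("alpha must satisfy 0.5 < alpha <= 1")
    if p_pos + p_neg >= alpha:
        # Estimated inside the stomach: the label follows the image-level GT,
        # not whichever of p_pos and p_neg happens to be larger.
        return "P" if image_is_cag else "N"
    if p_unrel >= alpha:
        return "U"  # estimated outside the stomach
    return None  # assumption: uncertain patches are left out of training


# A patch from a CAG image that the CNN places inside the stomach.
print(assign_aag_label(0.20, 0.75, 0.05, image_is_cag=True))
```

Because $\alpha > 0.5$, a patch can never satisfy both conditions at once, so the order of the two checks does not affect the result.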

Chronic atrophic gastritis detection

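With all selected patches ($\mathcal{P} = \mathcal{P}_{\mathrm{MAG}} + \mathcal{P}_{\mathrm{AAG}}$, $\mathcal{N} = \mathcal{N}_{\mathrm{MAG}} + \mathcal{N}_{\mathrm{AAG}}$, $\mathcal{U} = \mathcal{U}_{\mathrm{MAG}} + \mathcal{U}_{\mathrm{AAG}}$), we retrain the fine-tuned CNN to predict the patch-level class labels. For a GXI $X_{\mathrm{test}}$ whose stomach regions and GT are unknown, we estimate an image-level class label $y_{\mathrm{test}} \in \{1, 0\}$ that indicates CAG or non-CAG, respectively. First, we divide the target image $X_{\mathrm{test}}$ into patches. We denote the patch-level class label predicted by the retrained CNN as $c_{\mathrm{pred}} \in \{\mathrm{P}, \mathrm{N}, \mathrm{U}\}$. To eliminate the influence of patches outside the stomach, we select the patches that satisfy $c_{\mathrm{pred}} = \mathrm{P}$ or $c_{\mathrm{pred}} = \mathrm{N}$ for estimating the image-level class label. We calculate the ratio $R$ with the selected patches as $R = M_{\mathrm{P}} / (M_{\mathrm{P}} + M_{\mathrm{N}})$, where $M_{\mathrm{P}}$ and $M_{\mathrm{N}}$ are the numbers of patches that satisfy $c_{\mathrm{pred}} = \mathrm{P}$ and $c_{\mathrm{pred}} = \mathrm{N}$, respectively. Finally, the image-level estimation result $y_{\mathrm{test}}$ for the target image $X_{\mathrm{test}}$ is obtained as $y_{\mathrm{test}} = 1$ if $R > \beta$ and $y_{\mathrm{test}} = 0$ otherwise, where $\beta$ is a predefined threshold. By selecting only the patches that satisfy $c_{\mathrm{pred}} = \mathrm{P}$ or $c_{\mathrm{pred}} = \mathrm{N}$, the image-level class label can be estimated without the negative effect of regions outside the stomach.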
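The image-level decision can be sketched as below. The function names are hypothetical, and the sketch assumes that a larger share of positive patches indicates CAG, i.e., the image is labeled 1 when the ratio R exceeds the threshold β; how an image with no inside patches should be handled is not described in the text, so the sketch simply raises an error in that case.

```python
from collections import Counter
from typing import Iterable, Optional


def positive_ratio(pred_labels: Iterable[str]) -> Optional[float]:
    """R = M_P / (M_P + M_N), ignoring patches predicted as 'U'."""
    counts = Counter(pred_labels)
    m_p, m_n = counts["P"], counts["N"]
    if m_p + m_n == 0:
        return None  # no patch was predicted to lie inside the stomach
    return m_p / (m_p + m_n)


def classify_image(pred_labels: Iterable[str], beta: float) -> int:
    """Return 1 (CAG) if R exceeds beta, else 0 (non-CAG)."""
    ratio = positive_ratio(pred_labels)
    if ratio is None:
        raise ValueError("no inside-stomach patches; R is undefined")
    return 1 if ratio > beta else 0


# Predicted patch labels for one hypothetical test image.
labels = ["P", "P", "N", "U", "U", "P"]
print(positive_ratio(labels), classify_image(labels, beta=0.5))
```

Patches labeled U contribute to neither count, which is how the method keeps regions outside the stomach from influencing the image-level label.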

Figure 1 Overview of preparation of the dataset. CNN: Convolutional neural network; MAG: Manual annotation group; AAG: Automatic annotation group.

Evaluation of chronic atrophic gastritis detection results

A total of 815 GXIs, including 200 images (100 CAG and 100 non-CAG images) for training and 615 images (140 CAG and 475 non-CAG images) for evaluation, were used in this experiment. We set the number of GXIs in the MAG, $N_{\mathrm{MAG}}$, to {10, 20,…, 50}. Note that the numbers of CAG and non-CAG images in the MAG were both set to $N_{\mathrm{MAG}}/2$. We randomly sampled GXIs for the MAG from those for training, and the rest of them were used for the AAG. For an accurate evaluation, random sampling and calculating the performance of CAG detection were repeated five times at each $N_{\mathrm{MAG}}$. It took approximately 24 h to perform each trial. Note that all networks were computed on a single NVIDIA GeForce RTX 2080 Ti GPU. In this study, we extracted patches of 299 × 299 pixels in size from GXIs at intervals of 50 pixels. The following parameters were used for training of the CNN model: batch size = 32, learning rate = 0.0001, momentum = 0.9, and number of epochs = 50. The threshold $\alpha$ for estimating stomach regions was set to 0.9.
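To give a sense of scale, the following sketch enumerates the patch grid implied by these settings. It assumes that patches start at the top-left corner and that no padding is applied at the image borders, neither of which is specified in the text; the function name `patch_origins` is hypothetical.

```python
from typing import List


def patch_origins(image_size: int = 2048, patch_size: int = 299,
                  stride: int = 50) -> List[int]:
    """Top-left coordinates along one axis for patches that fit fully in the image."""
    return list(range(0, image_size - patch_size + 1, stride))


origins = patch_origins()
patches_per_image = len(origins) ** 2  # same grid along both axes
print(len(origins), origins[-1], patches_per_image)
```

Under these assumptions each 2048 × 2048 image yields 35 positions per axis, i.e., 1225 heavily overlapping patches, which is consistent with the overlap between inside and outside regions noted for Figure 3.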

The TensorFlow framework[25]was utilized for training CNNs. We utilized the Inception-v3[26]model with weights trained on ImageNet[27]for fine-tuning. To confirm the effectiveness of utilizing not only the MAG but also the AAG for training, we compared the proposed method utilizing only the MAG with the proposed method utilizing the MAG and AAG. Hereinafter, (MAG only) denotes the proposed method utilizing only the MAG, and (MAG + AAG) denotes the proposed method utilizing both the MAG and AAG.

The performance was measured by the following harmonic mean (HM) of sensitivity and specificity:

$$\mathrm{HM} = \frac{2 \times \mathrm{Sensitivity} \times \mathrm{Specificity}}{\mathrm{Sensitivity} + \mathrm{Specificity}}$$

Note that HM was obtained at the threshold $\beta$ providing the highest HM.
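The evaluation metric and the threshold search can be sketched as follows. The function names, the candidate grid of thresholds, and the toy ratios are assumptions made for illustration; the sketch also assumes that an image is predicted as CAG when its positive-patch ratio exceeds the threshold.

```python
from typing import Iterable, List, Tuple


def harmonic_mean(sensitivity: float, specificity: float) -> float:
    """HM = 2 * Sens * Spec / (Sens + Spec); defined as 0 when both are 0."""
    total = sensitivity + specificity
    return 0.0 if total == 0 else 2 * sensitivity * specificity / total


def sens_spec(ratios: List[float], truths: List[int], beta: float) -> Tuple[float, float]:
    """Sensitivity and specificity when images with ratio > beta are called CAG."""
    tp = sum(1 for r, y in zip(ratios, truths) if y == 1 and r > beta)
    tn = sum(1 for r, y in zip(ratios, truths) if y == 0 and r <= beta)
    n_pos = sum(truths)
    n_neg = len(truths) - n_pos
    sensitivity = tp / n_pos if n_pos else 0.0
    specificity = tn / n_neg if n_neg else 0.0
    return sensitivity, specificity


def best_threshold(ratios: List[float], truths: List[int],
                   candidates: Iterable[float]) -> Tuple[float, float]:
    """Return (highest HM, threshold that achieves it) over the candidates."""
    return max((harmonic_mean(*sens_spec(ratios, truths, b)), b) for b in candidates)


# Toy example: four images with their ratios R and image-level GT.
print(best_threshold([0.9, 0.8, 0.2, 0.1], [1, 1, 0, 0], [0.05, 0.5, 0.95]))
```

Because HM is low whenever either sensitivity or specificity is low, it penalizes thresholds that favor one class, which matters here given the imbalance between CAG and non-CAG images in the evaluation set.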

RESULTS

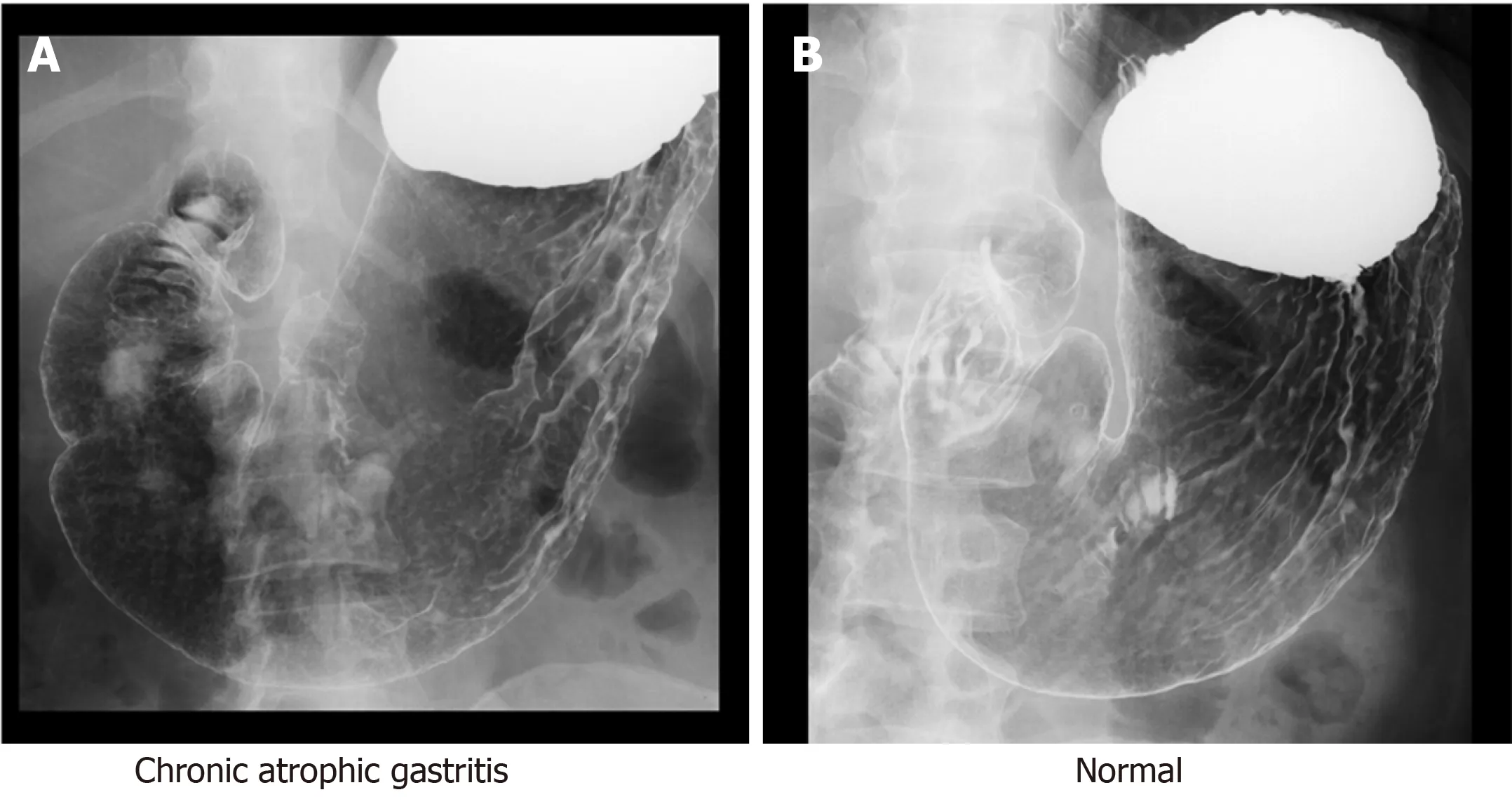

Our experimental results are shown in this section. Examples of GXIs for evaluation are shown in Figure 2. First, we evaluate the performance of the fine-tuned CNN used to select patches from the AAG. Note that the fine-tuned CNN does not need to distinguish with high accuracy whether the patches are extracted from CAG images or non-CAG images since we utilize the fine-tuned CNN only for estimating the stomach regions. Figure 3 shows the visualization results obtained by applying the fine-tuned CNN to the CAG image shown in Figure 2A.

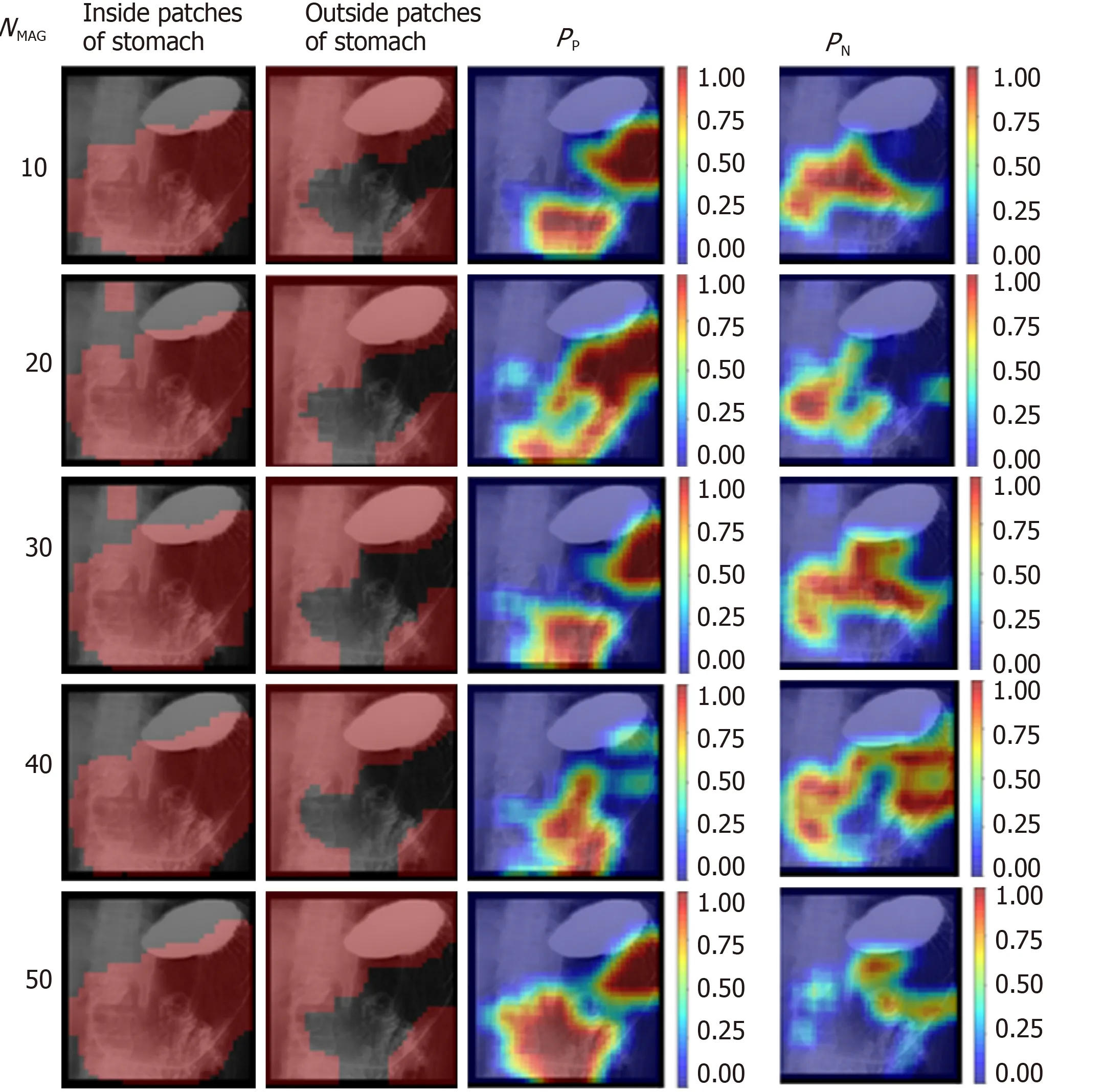

Specifically, the estimated patches of the inside and outside of the stomach and the visualization of $p_{\mathrm{P}}$ and $p_{\mathrm{N}}$ (i.e., the calculated probabilities of belonging to the patch-level class labels P and N) are shown in Figure 3. Note that the inside and outside regions of the stomach partially overlap since GXIs were divided into overlapping patches in this experiment. As shown in Figure 3, the regions where $p_{\mathrm{P}}$ is high tend to grow, and the regions where $p_{\mathrm{N}}$ is high tend to shrink, as $N_{\mathrm{MAG}}$ (i.e., the number of GXIs in the MAG) increases. In contrast, the stomach regions were estimated with high accuracy, and the estimated stomach regions did not depend on $N_{\mathrm{MAG}}$. Therefore, it is worth utilizing the fine-tuned CNN for estimating the stomach regions.

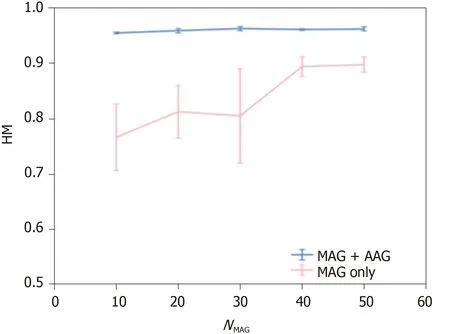

Next, we evaluate the performance of CAG detection. To confirm the effectiveness of considering stomach regions, we evaluated the detection performance of a baseline method that did not consider the stomach regions by setting the patch-level class labels to the same as the image-level GT. With 200 GXIs used for training, the HM of the baseline method was 0.945. We then show the detection performance of methods that considered the stomach regions. The detection performance of (MAG only) and that of (MAG + AAG) are shown in Figure 4, where HMs are shown as means ± SD of five trials. As shown in Figure 4, the mean HM of (MAG + AAG) is higher than that of (MAG only) at each $N_{\mathrm{MAG}}$, and the standard deviation of the HM of (MAG + AAG) is smaller than that of (MAG only) at each $N_{\mathrm{MAG}}$. Therefore, the effectiveness of utilizing not only the MAG but also the AAG for training is confirmed when the stomach regions are manually annotated for the same number of GXIs. In addition, to confirm the effect of reducing the workload of manual annotation on detection performance, we evaluated the detection performance of a method that manually annotated the stomach regions for all GXIs used in training (i.e., $N_{\mathrm{MAG}} = 200$). The HM of this method was 0.965. The negative effect of reducing the workload of manual annotation is small since the mean HM of (MAG + AAG) approaches the HM of this method even when $N_{\mathrm{MAG}}$ is small.

DISCUSSION

This study demonstrated that highly accurate detection of CAG was feasible even when we manually annotated the stomach regions for a small number of GXIs. Figure 4 (MAG only) indicates that a larger number of GXIs with annotation of the stomach regions are required to realize highly accurate detection. In contrast, a highly accurate estimation of the stomach regions is feasible even when the number of manually annotated images is limited. Therefore, highly accurate detection of CAG and reduction of the labor required for manually annotating the stomach regions are simultaneously realized by the proposed method when a large number of GXIs with the image-level GT are available for training.

The proposed method can be applied to other tasks in the field of medical image analysis since regions outside a target organ in medical images adversely affect the performance of the tasks. With only a simple annotation of regions of the target organ for a small number of medical images, the proposed method will enable accurate analysis that excludes the effect of regions outside the target organ.

In general, endoscopic examination is superior to X-ray examination for the evaluation of CAG in imaging inspections[28]. Endoscopic examination has been recommended for gastric cancer mass screening programs in East Asian countries in recent years. For example, South Korea started an endoscopic examination-based gastric cancer screening program in 2002, and the proportion of individuals who underwent endoscopic examination greatly increased from 31.15% in 2002 to 72.55% in 2011[28]. Japan also started an endoscopic examination-based gastric cancer mass screening program in addition to X-ray examination in 2016. However, there remains the problem that the number of individuals who can be examined in a day is limited. Hence, X-ray examination still plays an important role in gastric cancer mass screening.

Figure 2 Examples of gastric X-ray images for evaluation.

Figure 3 Visualization of the results estimated by the fine-tuned convolutional neural network to select patches from the automatic annotation group at each $N_{\mathrm{MAG}}$ for the chronic atrophic gastritis image shown in Figure 2A. The inside and outside regions of the stomach overlap since gastric X-ray images were divided into overlapping patches in this experiment.

To realize effective gastric cancer mass screening, it is crucial to narrow down individuals who need endoscopic examination by evaluating the condition of the stomach. Then CAD systems that can provide additional information to doctors will be helpful. Particularly, our approach presented in this paper realized the construction of machine learning-based CAG detection with a small number of training images. This suggests that the CAG detection method can be trained with data from a small-scale or medium-scale hospital without a large number of medical images for training.

This study has a few limitations. First, GXIs taken from only a single angle were analyzed in this study. In general X-ray examinations, GXIs are taken from multiple angles for each patient to examine the inside of the stomach thoroughly. Therefore, the detection performance will be improved by applying the proposed method to GXIs taken from multiple angles. Furthermore, the GXIs analyzed in this study were obtained in a single medical facility. To verify versatility, the proposed method should be applied to GXIs obtained in various medical facilities.

Figure 4 Harmonic mean of the detection results obtained by changing $N_{\mathrm{MAG}}$. Results are shown as means ± SD of five trials. AAG: Automatic annotation group; MAG: Manual annotation group; HM: Harmonic mean.

In this paper, a method for CAG detection from GXIs is presented. In the proposed method, we manually annotate the stomach regions for some of the GXIs used in training and automatically estimate the stomach regions for the rest of the GXIs. By using GXIs with the stomach regions for training, the proposed method realizes accurate CAG detection that automatically excludes the effect of regions outside the stomach. Experimental results showed the effectiveness of the proposed method.

ARTICLE HIGHLIGHTS

Research perspectives

Our CAG detection method can be trained with data from a small-scale or medium-scale hospital without medical data sharing, which carries the risk of leakage of personal information.

ACKNOWLEDGEMENTS

Experimental data were provided by the University of Tokyo Hospital in Japan. We express our thanks to Katsuhiro Mabe of the Junpukai Health Maintenance Center, and Nobutake Yamamichi of The University of Tokyo.

World Journal of Gastroenterology, 2020, Issue 25
